This post is a little different from our normal posts. Normally, we post about how to do something. This post, however, is about failure and what we learned in the process. In our previous post, we created a basic chess engine. My next step was to improve the engine. I tried a number of approaches to analyzing my engine, and none of them worked. In this post, I will walk through what I did and what I should have done instead.

I am not disappointed by my failure. My failure was actually really interesting. I enjoyed learning and experimenting. Of course, it is better when the learning and experimentation come with success, but that is not always the case. There is a part of me that wanted to keep working on this. However, I forced myself to write up what I have done, both for my own memory and in case it helps anyone else.

I split this post into two parts. First, I will explain what I did wrong. Second, I will talk about the tools that I created. The tools have value even if my hypothesis did not work.

The Wrong Path

Once I had the basic engine working, I wanted to use data analysis to improve the engine. In particular, the weights that I chose for each factor were fairly arbitrary. Therefore, I decided to run my engine's evaluation over chess master games.

I will walk through my process below (I had fun building the tools), but before I do that, let me explain why this was a bad idea.

Analyzing Chess Master Games with the TurtleBot Chess Engine

I wanted to evaluate existing games using my board position scoring algorithm (TurtleBot). The hypothesis was that if my model was accurate, there would be a correlation between the higher scored board positions and the eventual winner. These correlations would then help me improve my weighting factors.

I started by downloading a lot of games and taking a random board position from each game. I then evaluated the board position with my model and compared how strong or weak I thought the position was against who eventually won the game.

I was disappointed when none of my factors were correlated with the final result. Putting the factors together, my model was only slightly better than 50% at predicting the winner. However, I realized that this is because master games are extremely close at any given point in the game. I don’t know if this is true, but someone once told me that chess masters only make 1-2 mistakes per game (including both blunders and tactical errors). If you could consistently classify a game as a win or loss from a position in the middle, then the game is probably extremely one-sided.

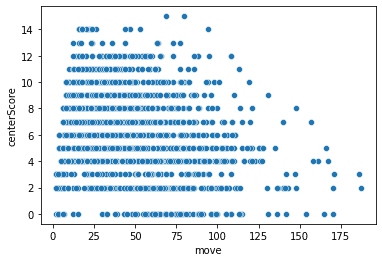
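The comparison described above can be sketched roughly as follows. This is a minimal illustration, not the actual analysis code: it assumes records shaped like the JSON output shown later in this post, and it assumes that `pieceScore` belongs to the side to move (`nextTurn`) and `oppPieceScore` to the other side. The function name `prediction_accuracy` is made up for this example.

```python
def prediction_accuracy(records):
    """Fraction of decisive games where the side with more material went on to win.

    Assumption: pieceScore is the side to move, oppPieceScore is the opponent.
    """
    correct = 0
    counted = 0
    for rec in records:
        diff = rec["pieceScore"] - rec["oppPieceScore"]
        # Skip positions with equal material and games without a decisive result.
        if diff == 0 or rec["winner"] not in ("w", "b"):
            continue
        side_to_move = rec["nextTurn"]
        opponent = "b" if side_to_move == "w" else "w"
        predicted = side_to_move if diff > 0 else opponent
        counted += 1
        if predicted == rec["winner"]:
            correct += 1
    # None signals that no position allowed a prediction.
    return correct / counted if counted else None
```

An accuracy close to 0.5 on decisive games would mean the factor is no better than a coin flip, which is roughly what I saw.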

Therefore, I should not have used the game analysis to predict which positions are winners or losers. Instead, I should have used it to study tactics. In other words, I don’t care whether a particular player won or lost the game. I treat everyone as a winner and find ways to make my bot’s board look like their board. I switched to analyzing the evaluated score and plotting it by move number. For example, here is the score for holding the center of the board (giving 3 points for the center four squares, 2 points for the 12 squares bordering the center, and 1 point for pieces that were attacking the center four squares).

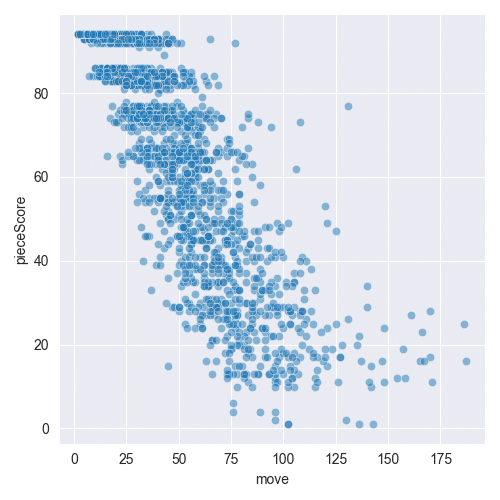
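To make the center scoring concrete, here is a minimal Python sketch of the occupancy part only (3 points for the center four squares, 2 points for the 12 bordering squares). The real evaluation lives in the C# bot and is not reproduced here. The function name `center_occupancy_score` is an assumption for this example, and the 1-point attack term is deliberately left out because it needs full move generation.

```python
# Zero-indexed (file, rank) pairs for d4, e4, d5, e5.
CENTER = {(3, 3), (3, 4), (4, 3), (4, 4)}


def center_occupancy_score(fen, color):
    """Score the pieces of `color` ("w" or "b") that occupy central squares."""
    placement = fen.split()[0]  # first FEN field: piece placement
    score = 0
    # FEN lists ranks from 8 down to 1, separated by "/".
    for row, rank_text in enumerate(placement.split("/")):
        rank = 7 - row
        file = 0
        for ch in rank_text:
            if ch.isdigit():
                file += int(ch)  # a digit means that many empty squares
                continue
            piece_is_white = ch.isupper()
            if (color == "w") == piece_is_white:
                if (file, rank) in CENTER:
                    score += 3
                elif 2 <= file <= 5 and 2 <= rank <= 5:
                    score += 2  # the ring of 12 squares around the center
            file += 1
    return score
```

For the second sample position in the JSON output further down, this sketch gives 10 for Black (the side to move) and 9 for White, which agrees with the `centerScore` and `oppCenterScore` fields in that record.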

Similarly, we can plot the piece score against the moves.

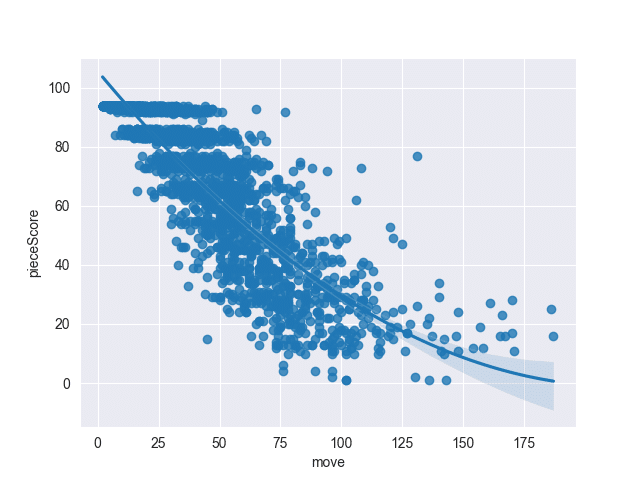

You can see that there is some (non-linear) correlation here. We can even fit a regression line.

I attempted to use this regression equation as the weight in my calculations. However, the results were much worse than my previous weighting.
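For reference, a straight-line fit of a score against the move number can be sketched with ordinary least squares in plain Python. This is only an illustration of the idea, not the exact fit I used. The name `fit_line` and the numbers in the usage example are invented for this sketch.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: total piece score sampled at a few move numbers.
moves = [0, 10, 20, 30]
piece_scores = [78, 70, 62, 54]
slope, intercept = fit_line(moves, piece_scores)
```

A line like this describes how a factor typically changes as the game goes on. It does not say how much that factor should count in the evaluation, which may be part of why using it as a weight went badly.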

Start with Your Own Problems

I could have started much more simply. After building the tools and analyzing the chess master games, I looked at my bot’s own games. It was horrible. I pulled random board positions (normally distributed around the mean number of plies). I then used Lichess to check various positions. Lichess uses Stockfish to analyze a position and can tell which side has the advantage. This was particularly useful. Using this analysis, I was surprised that my bot’s board positions were so bad. In every case, the game was practically already lost. In most cases, the opponent was up by double digits (or at least high single digits). In one game, the opponent had an advantage of 8, and my bot recommended sacrificing the queen for a knight with no tactical advantage in sight! Therefore, I realized that I needed to work more fundamentally on my model.
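Picking a position "normally distributed around the mean number of plies" can be sketched as below. The function name `pick_random_ply` and its parameters are assumptions for illustration. The standard deviation in particular is a free choice that this post does not specify.

```python
import random


def pick_random_ply(total_plies, mean_ply, std_dev, rng=random):
    """Draw a ply number from a normal distribution, clamped to the game length."""
    ply = round(rng.gauss(mean_ply, std_dev))
    # Clamp so the result always points at a real position in the game.
    return max(1, min(total_plies, ply))


# Example: a game of 80 plies, sampling around ply 40.
sample = pick_random_ply(total_plies=80, mean_ply=40, std_dev=15)
```

Clamping piles a little extra probability onto the first and last ply, which seemed acceptable for a rough sample like this.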

When is the REPL not a REPL?

In my analysis, I processed thousands of chess games. I placed the chess games and the random positions in a JSON file. I then used Python and Pandas to analyze the games. I am very much an amateur at data science. One of the things I love about Python is the REPL. When you don’t know how best to proceed, it is easy and fast to use the REPL to prototype some ideas. However, as the prototype becomes more complex, the REPL becomes less efficient. For example, if you keep doing the same thing over and over, obviously you write a function. However, writing even a simple function in the REPL can be difficult (syntax errors, anyone?). Rewriting the function when you discover a way to improve it is never fun.

Enter Jupyter Notebook. I should have thought of this earlier. Jupyter Notebook is like a super REPL. You get the same ability to run code quickly. At the same time, you can easily make changes and re-run the code. Finally, and maybe most valuable for data analysis, you can keep notes in markdown as well as the plots that you create.

Creating Chess Engine Analysis Tools

Now that I have explained my mistakes, I can walk through my approach and the tools I built. Despite not achieving what I intended to, I created some useful tools for analyzing chess games (at a simplistic level). I also learned a lot in the process. The tools are available on our GitHub page in our TurtleBot chess project.

Show me the Files

For the analysis, I needed a lot of PGN files. I started with chess.com. However, I could only download one game at a time. I needed many more. Therefore, I found PGN Mentor, which allows downloading a large number of files by player name.

The downside to PGN Mentor was that all games for each chess master/grandmaster were in one file. Therefore, I needed to create a script to split each download into individual game files. These files were then fed into another script that reads a file and extracts a random move from the game. That script creates a JSON file with the key information:

- FEN

- Current player

- Who won the game

- Current move number

- Total number of moves

This particular part of the process relied on a Python chess engine I had created several years ago that could read a PGN file and replay the game from the file.
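The splitting step can be sketched in a few lines of Python. This is a simplified illustration rather than the script from the repository. It assumes each game in the download starts with an `[Event ...]` tag line, which is the usual PGN export layout, and the name `split_pgn_games` is made up for this example.

```python
def split_pgn_games(pgn_text):
    """Split a multi-game PGN string into a list of single-game strings.

    Assumption: every game begins with an "[Event " tag line.
    """
    games = []
    current = []
    for line in pgn_text.splitlines():
        # A new "[Event " line closes the previous game, if there is one.
        if line.startswith("[Event ") and current:
            games.append(current)
            current = []
        current.append(line)
    games.append(current)
    texts = ["\n".join(lines).strip() for lines in games]
    # Drop empty chunks, e.g. blank lines before the first game.
    return [text for text in texts if text]
```

Each returned string can then be written to its own file or passed straight to the step that replays the game and picks a random position.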

As a future improvement, I can combine those two steps into one (e.g., process a single file with multiple games, pick a random move from each, and write the results to a JSON file).

Analysis Function

Once we have the JSON files with random moves, we need to feed them into our evaluation algorithm. Therefore, I created a separate C# command line project that opens the JSON file, evaluates each position, and writes the scores back to a JSON file. We are finally ready for analysis.

Although I would prefer to work in Python, this part of the process needed to be in C#. There are two reasons for this: (1) my chess bot evaluation logic is already written in C# and (2) the logic relies on the API provided by the chess challenge. I did not want to rewrite either my evaluation logic or the API in Python. Therefore, I stayed with C#.

Below is an example of the JSON file output:

    [
      {
        "FEN": "Q7/2p1k3/8/1p1P2p1/p7/1P6/P3K2q/8 w b3 1 55",
        "totalMoves": 128,
        "move": 108,
        "winner": "b",
        "centerScore": 3,
        "centerAttackScore": 0,
        "oppAttackScore": 0,
        "slidingEdgeScore": -1,
        "oppCenterScore": 0,
        "pieceScore": 23,
        "oppPieceScore": 24,
        "rookScore": 0,
        "checkmateScore": -5,
        "totalScore": 21,
        "nextTurn": "w",
        "unprotectedScore": 3
      },
      {
        "FEN": "1r3r2/1p3pk1/2b2bp1/P2pp2p/2BP3P/4BP2/1P1R2PK/R7 b h6 0 31",
        "totalMoves": 118,
        "move": 73,
        "winner": "b",
        "centerScore": 10,
        "centerAttackScore": 4,
        "oppAttackScore": 4,
        "slidingEdgeScore": 0,
        "oppCenterScore": 9,
        "pieceScore": 56,
        "oppPieceScore": 56,
        "rookScore": 0,
        "checkmateScore": 0,
        "totalScore": 66,
        "nextTurn": "b",
        "unprotectedScore": 2
      }
    ]

Next Steps

I am not done improving my bot. After two weeks of work, I was basically back where I started: my bot was no better than when I first created it. I think I can still improve it now that I know what I am looking for. The next tool I want to create is one that lets me enter a board position, a proposed move, and a Stockfish move to see why my evaluation scored the Stockfish move lower than my move.