Welcome back to the PyMinMaximus series! In Part 1, we built a complete chess board representation with legal move generation. Our engine can now understand chess positions and generate all possible moves, but it doesn’t know how to think about chess.

In this post, we’ll implement the core algorithms that allow PyMinMaximus to look ahead, evaluate positions, and choose the best moves. By the end, you’ll have an engine that can actually play chess!

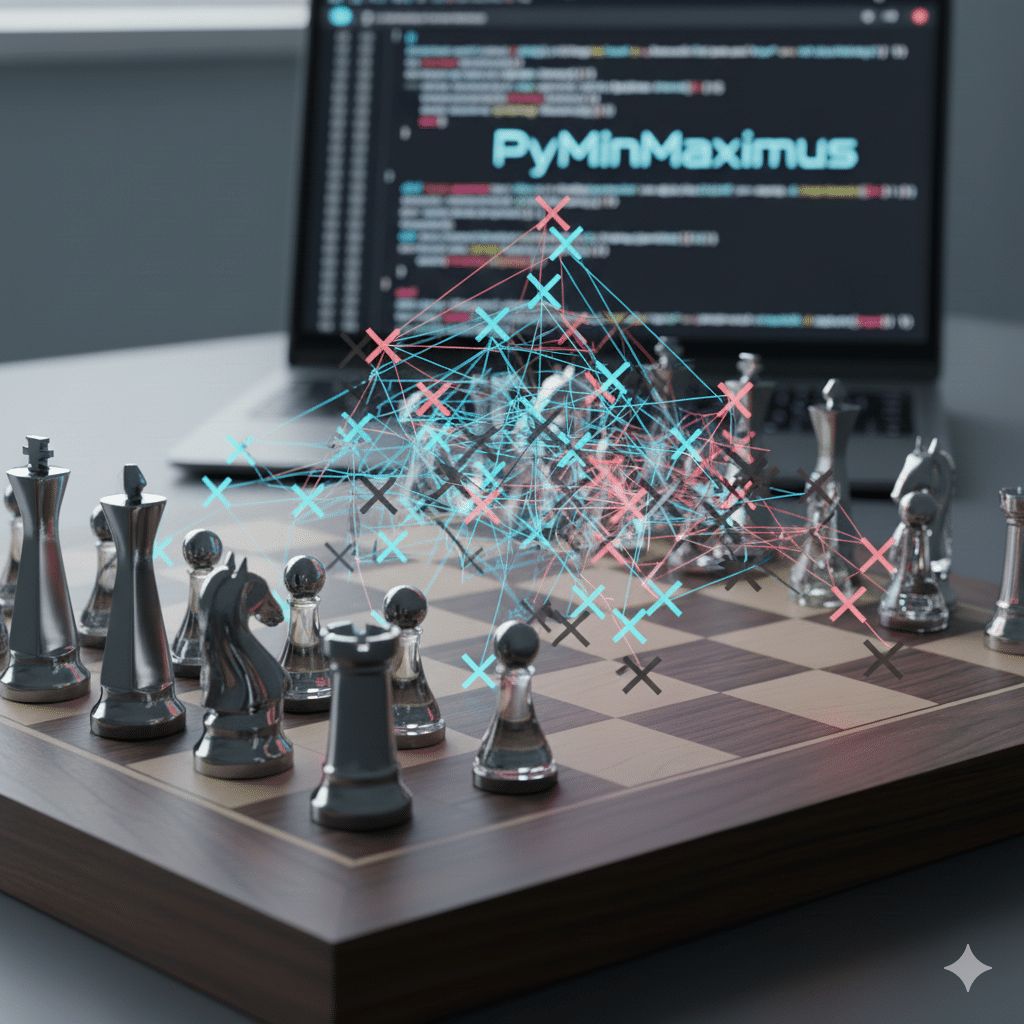

Understanding Game Trees

Before we write any code, let’s understand what happens when a chess engine “thinks.”

What is a Game Tree?

A game tree is a way of visualizing all possible sequences of moves in a game. Each node in the tree represents a position, and each edge represents a move:

Current Position

/ | \

Move 1 Move 2 Move 3

/ | \

Reply 1 Reply 2 Reply 3

In chess, the tree is enormous. From the starting position:

- Depth 1 (1 ply): 20 possible moves

- Depth 2 (2 ply): 400 possible positions

- Depth 3 (3 ply): 8,902 possible positions

- Depth 4 (4 ply): 197,281 possible positions

This exponential growth is called the branching factor (roughly 35 in chess). We can’t search the entire tree, so we search to a fixed depth and evaluate the resulting positions.

Ply vs. Moves

Important terminology:

- 1 ply = one half-move (one player’s turn)

- 1 move = two plies (both players make a move)

When we say “search to depth 4,” we mean 4 plies, or 2 full moves.

The Minimax Algorithm

Minimax is the foundation of game-playing AI. The idea is elegant:

- Maximizing player (us): We want to maximize our evaluation score

- Minimizing player (opponent): They want to minimize our score

We assume both players play optimally. At each level of the tree:

- Our turn: Choose the move with the highest evaluation

- Opponent’s turn: They’ll choose the move with the lowest evaluation (worst for us)

Minimax in Pseudocode

function minimax(position, depth, maximizingPlayer):

if depth == 0 or game is over:

return evaluate(position)

if maximizingPlayer:

maxEval = -infinity

for each move in position:

eval = minimax(move, depth - 1, false)

maxEval = max(maxEval, eval)

return maxEval

else:

minEval = +infinity

for each move in position:

eval = minimax(move, depth - 1, true)

minEval = min(minEval, eval)

return minEvalBuilding a Simple Evaluation Function

Before implementing minimax, we need a way to evaluate positions. For now, we’ll use material count – simply adding up the value of our pieces.

Traditional piece values:

- Pawn = 100

- Knight = 320

- Bishop = 330

- Rook = 500

- Queen = 900

- King = 20000 (effectively infinite)

We can implement this in Python with the following Evaluator class.

# evaluation.py

from constants import *

class Evaluator:

def __init__(self):

self.piece_values = {

PAWN: 100,

KNIGHT: 320,

BISHOP: 330,

ROOK: 500,

QUEEN: 900,

KING: 20000

}

def evaluate(self, board):

"""

Evaluate the position from White's perspective.

Positive = good for White, Negative = good for Black

"""

score = 0

for row in range(8):

for col in range(8):

piece = board.board[row][col]

if piece == EMPTY:

continue

piece_type = piece & 7

color = piece & 24

value = self.piece_values[piece_type]

if color == WHITE:

score += value

else:

score -= value

return score

def evaluate_relative(self, board):

"""

Evaluate from the perspective of the side to move.

Positive = good for side to move

"""

score = self.evaluate(board)

return score if board.to_move == WHITE else -scoreThis simple evaluator just counts material. A position where White is up a queen would score about +900.

Implementing Minimax

Now let’s implement the minimax algorithm:

# search.py

from evaluation import Evaluator

class SearchEngine:

def __init__(self, board, evaluator=None):

self.board = board

self.evaluator = evaluator if evaluator else Evaluator()

self.nodes_searched = 0

def minimax(self, depth, maximizing_player):

"""

Basic minimax search algorithm.

Returns the evaluation score for the current position.

"""

self.nodes_searched += 1

# Base case: reached maximum depth or game over

if depth == 0:

return self.evaluator.evaluate_relative(self.board)

moves = self.board.generate_legal_moves()

# Checkmate or stalemate

if len(moves) == 0:

if self.board.is_in_check(self.board.to_move):

# Checkmate - return a score that's worse the sooner it happens

return -20000 + (10 - depth) # Prefer longer defense

else:

# Stalemate

return 0

if maximizing_player:

max_eval = float('-inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.minimax(depth - 1, False)

self.board.unmake_move(move, undo_info)

max_eval = max(max_eval, eval_score)

return max_eval

else:

min_eval = float('inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.minimax(depth - 1, True)

self.board.unmake_move(move, undo_info)

min_eval = min(min_eval, eval_score)

return min_eval

def find_best_move(self, depth):

"""

Find the best move at the root of the search tree.

"""

self.nodes_searched = 0

best_move = None

best_eval = float('-inf')

moves = self.board.generate_legal_moves()

if len(moves) == 0:

return None

for move in moves:

undo_info = self.board.make_move(move)

# We're always maximizing at root (from our perspective)

eval_score = self.minimax(depth - 1, False)

self.board.unmake_move(move, undo_info)

if eval_score > best_eval:

best_eval = eval_score

best_move = move

return best_move, best_evalLet’s test this basic minimax:

# Test minimax

from board import Board

from search import SearchEngine

board = Board()

engine = SearchEngine(board)

print("Searching for best move at depth 4...")

best_move, score = engine.find_best_move(4)

print(f"Best move: {best_move}")

print(f"Evaluation: {score}")

print(f"Nodes searched: {engine.nodes_searched:,}")This works, but it’s slow! Even at depth 4, we’re searching more than 200,000 positions. We need optimization.

Alpha-Beta Pruning: Making Minimax Fast

Alpha-beta pruning is a brilliant optimization. The key insight: we can skip searching branches that can’t possibly affect the final decision.

The Concept

Imagine you’re choosing a restaurant.

- Restaurant A: You check reviews and it’s rated 4 stars

- Restaurant B: First review is 1 star

You don’t need to read more reviews of Restaurant B. You are safer choosing Restaurant A, which has all reviews 4 stars or higher. It is possible that Restaurant B has a 5 star review, but it doesn’t matter because you don’t know whether you would get the 1 star experience or the 5 star experience. Therefore, you would choose Restaurant A. You can prune the search.

Alpha-beta works the same way:

- Alpha: The best value the maximizing player can guarantee

- Beta: The best value the minimizing player can guarantee

If we find that a branch can’t improve on what we’ve already found, we skip it.

Alpha-Beta in Code

class SearchEngine:

# ... previous code ...

def alphabeta(self, depth, alpha, beta, maximizing_player):

"""

Minimax with alpha-beta pruning.

Alpha: best value for maximizer found so far

Beta: best value for minimizer found so far

"""

self.nodes_searched += 1

if depth == 0:

return self.evaluator.evaluate_relative(self.board)

moves = self.board.generate_legal_moves()

if len(moves) == 0:

if self.board.is_in_check(self.board.to_move):

return -20000 + (10 - depth)

else:

return 0

if maximizing_player:

max_eval = float('-inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, False)

self.board.unmake_move(move, undo_info)

max_eval = max(max_eval, eval_score)

alpha = max(alpha, eval_score)

# Beta cutoff

if beta <= alpha:

break # Prune remaining moves

return max_eval

else:

min_eval = float('inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, True)

self.board.unmake_move(move, undo_info)

min_eval = min(min_eval, eval_score)

beta = min(beta, eval_score)

# Alpha cutoff

if beta <= alpha:

break # Prune remaining moves

return min_eval

def find_best_move_alphabeta(self, depth):

"""

Find the best move using alpha-beta pruning.

"""

self.nodes_searched = 0

best_move = None

best_eval = float('-inf')

alpha = float('-inf')

beta = float('inf')

moves = self.board.generate_legal_moves()

if len(moves) == 0:

return None, 0

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, False)

self.board.unmake_move(move, undo_info)

if eval_score > best_eval:

best_eval = eval_score

best_move = move

alpha = max(alpha, eval_score)

return best_move, best_evalMove Ordering: Amplifying Alpha-Beta

Alpha-beta pruning works better when we search good moves first. If we find the best move early, we can prune more branches.

Simple move ordering heuristics:

- Captures first (especially captures of valuable pieces)

- Checks (forcing moves)

- Other moves

class SearchEngine:

# ... previous code ...

def order_moves(self, moves):

"""

Order moves to improve alpha-beta pruning.

Better moves first = more pruning.

"""

def move_score(move):

score = 0

# Get the captured piece (if any)

captured = self.board.board[move.to_row][move.to_col]

if captured != EMPTY:

# MVV-LVA: Most Valuable Victim - Least Valuable Attacker

captured_value = self.evaluator.piece_values[captured & 7]

attacker = self.board.board[move.from_row][move.from_col]

attacker_value = self.evaluator.piece_values[attacker & 7]

# Prioritize capturing valuable pieces with cheap pieces

score += captured_value * 10 - attacker_value

# Prioritize promotions

if move.promotion:

score += 800

# Prioritize checks (we'll need to make the move to check this)

# For now, we'll skip this to keep it simple

return score

return sorted(moves, key=move_score, reverse=True)

def alphabeta(self, depth, alpha, beta, maximizing_player):

"""

Alpha-beta with move ordering.

"""

self.nodes_searched += 1

if depth == 0:

return self.evaluator.evaluate_relative(self.board)

moves = self.board.generate_legal_moves()

if len(moves) == 0:

if self.board.is_in_check(self.board.to_move):

return -20000 + (10 - depth)

else:

return 0

# Order moves for better pruning

moves = self.order_moves(moves)

if maximizing_player:

max_eval = float('-inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, False)

self.board.unmake_move(move, undo_info)

max_eval = max(max_eval, eval_score)

alpha = max(alpha, eval_score)

if beta <= alpha:

break

return max_eval

else:

min_eval = float('inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, True)

self.board.unmake_move(move, undo_info)

min_eval = min(min_eval, eval_score)

beta = min(beta, eval_score)

if beta <= alpha:

break

return min_evalTransposition Tables: Remembering What We’ve Seen

Chess positions can be reached through different move orders. A transposition table stores positions we’ve already evaluated so we don’t search them again.

class TranspositionTable:

def __init__(self, size_mb=64):

# Approximate number of entries based on memory

self.size = (size_mb * 1024 * 1024) // 100 # rough estimate

self.table = {}

def get_hash(self, board):

"""

Simple hash of the board position.

In a real engine, we'd use Zobrist hashing.

"""

return board.to_fen()

def store(self, board, depth, score, flag):

"""

Store a position evaluation.

flag: 'exact', 'lowerbound', or 'upperbound'

"""

if len(self.table) >= self.size:

# Simple replacement: clear oldest entries

self.table.clear()

hash_key = self.get_hash(board)

self.table[hash_key] = {

'depth': depth,

'score': score,

'flag': flag

}

def probe(self, board, depth, alpha, beta):

"""

Check if we've seen this position before.

Returns (found, score) tuple.

"""

hash_key = self.get_hash(board)

if hash_key not in self.table:

return False, 0

entry = self.table[hash_key]

# Only use if searched to equal or greater depth

if entry['depth'] < depth:

return False, 0

score = entry['score']

flag = entry['flag']

if flag == 'exact':

return True, score

elif flag == 'lowerbound':

if score >= beta:

return True, score

elif flag == 'upperbound':

if score <= alpha:

return True, score

return False, 0

class SearchEngine:

def __init__(self, board, evaluator=None):

self.board = board

self.evaluator = evaluator if evaluator else Evaluator()

self.nodes_searched = 0

self.tt = TranspositionTable()

def alphabeta(self, depth, alpha, beta, maximizing_player):

"""

Alpha-beta with transposition table.

"""

alpha_orig = alpha

# Check transposition table

tt_hit, tt_score = self.tt.probe(self.board, depth, alpha, beta)

if tt_hit:

return tt_score

self.nodes_searched += 1

moves = self.board.generate_legal_moves()

if depth == 0 or len(moves) == 0:

score = self.evaluator.evaluate(self.board)

return score

moves = self.order_moves(moves)

if maximizing_player:

max_eval = float('-inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, False)

self.board.unmake_move(move, undo_info)

max_eval = max(max_eval, eval_score)

alpha = max(alpha, eval_score)

if beta <= alpha:

break

# Store in transposition table

if max_eval <= alpha_orig:

flag = 'upperbound'

elif max_eval >= beta:

flag = 'lowerbound'

else:

flag = 'exact'

self.tt.store(self.board, depth, max_eval, flag)

return max_eval

else:

min_eval = float('inf')

for move in moves:

undo_info = self.board.make_move(move)

eval_score = self.alphabeta(depth - 1, alpha, beta, True)

self.board.unmake_move(move, undo_info)

min_eval = min(min_eval, eval_score)

beta = min(beta, eval_score)

if beta <= alpha:

break

# Store in transposition table

if min_eval <= alpha_orig:

flag = 'upperbound'

elif min_eval >= beta:

flag = 'lowerbound'

else:

flag = 'exact'

self.tt.store(self.board, depth, min_eval, flag)

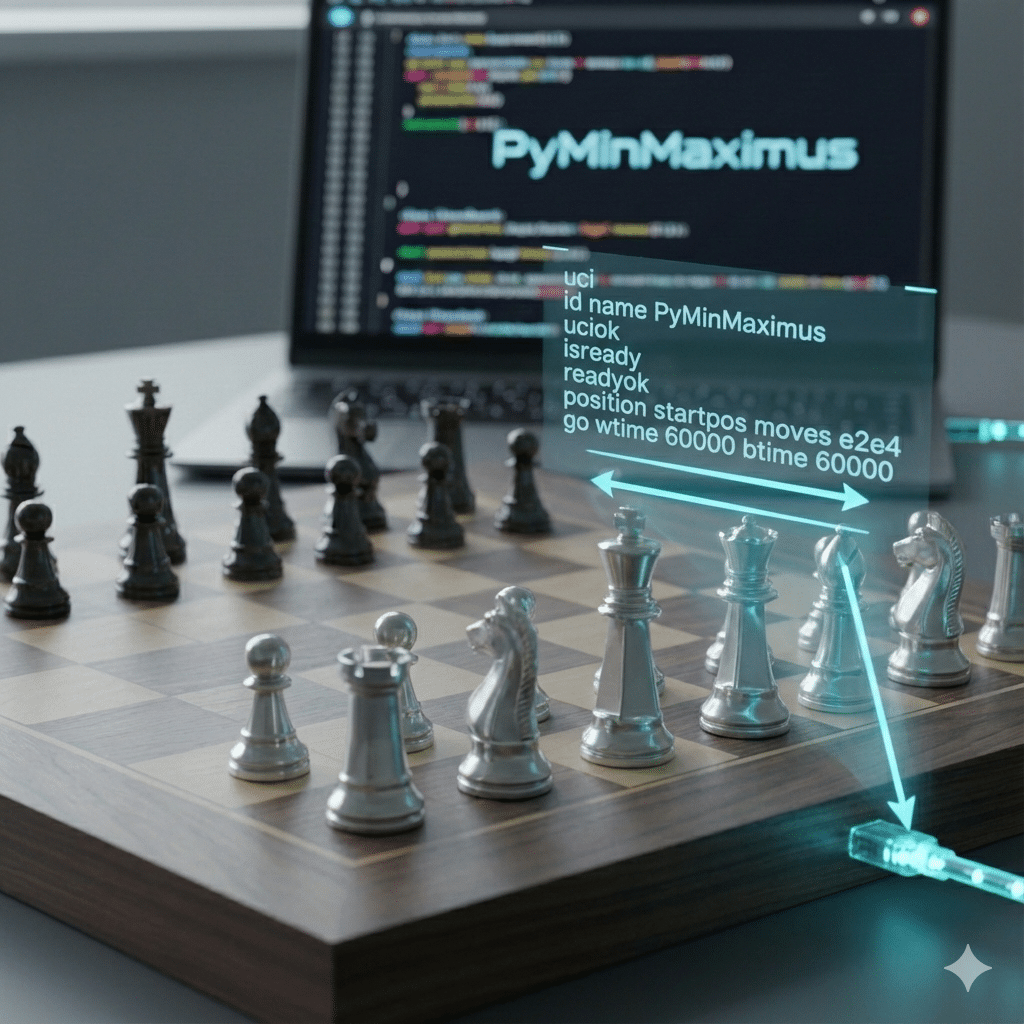

return min_evalIterative Deepening: Getting the Best of Both Worlds

Iterative deepening seems wasteful at first: search depth 1, then depth 2, then depth 3, etc. But it has huge benefits:

- Time management: We can stop at any time and have a move

- Move ordering: Information from shallow searches improves deep searches

- Minimal overhead: The deeper searches dominate the cost anyway

class SearchEngine:

# ... previous code ...

def iterative_deepening(self, max_depth, time_limit=None):

"""

Iteratively search to increasing depths.

"""

import time

start_time = time.time()

best_move = None

best_score = float('-inf')

for depth in range(1, max_depth + 1):

# Check time

if time_limit and (time.time() - start_time) > time_limit:

break

self.nodes_searched = 0

move, score = self.find_best_move_alphabeta(depth)

elapsed = time.time() - start_time

nps = self.nodes_searched / elapsed if elapsed > 0 else 0

print(f"Depth {depth}: {move} (score: {score}) "

f"[{self.nodes_searched:,} nodes in {elapsed:.2f}s, "

f"{nps:,.0f} nps]")

if move:

best_move = move

best_score = score

# Stop if we found a mate

if abs(score) > 19000:

break

return best_move, best_scoreTesting: Tactical Puzzles

Let’s test PyMinMaximus on some tactical puzzles:

def test_tactics():

"""Test the engine on tactical puzzles."""

from board import Board

from search import SearchEngine

puzzles = [

{

'name': 'Mate in 1',

'fen': 'r1bqkb1r/pppp1Qpp/2n2n2/4p3/2B1P3/8/PPPP1PPP/RNB1K1NR b KQkq - 0 4',

'solution': None # Black is already mated

},

{

'name': 'Back Rank Mate',

'fen': '6k1/5ppp/8/8/8/8/5PPP/3R2K1 w - - 0 1',

'solution': 'd1d8' # Rd8#

},

{

'name': 'Queen Fork',

'fen': 'r1bqkb1r/pppp1ppp/2n5/4p3/2B1n3/5N2/PPPPQPPP/RNB1K2R w KQkq - 0 1',

'solution': 'e2e5' # Qxe5+ wins the knight

},

{

'name': 'Smothered Mate',

'fen': '6rk/6pp/7N/8/8/8/8/R3K3 w - - 0 1',

'solution': 'h6f7' # Nf7#

}

]

for puzzle in puzzles:

print(f"\n{'='*60}")

print(f"Puzzle: {puzzle['name']}")

print(f"FEN: {puzzle['fen']}")

print('='*60)

board = Board()

board.from_fen(puzzle['fen'])

print(board)

engine = SearchEngine(board)

best_move, score = engine.iterative_deepening(6, time_limit=5.0)

if best_move:

print(f"\nEngine's choice: {best_move}")

if puzzle['solution']:

if str(best_move) == puzzle['solution']:

print("✓ CORRECT!")

else:

print(f"✗ Expected: {puzzle['solution']}")

else:

print("No legal moves (checkmate or stalemate)")

if __name__ == "__main__":

test_tactics()Performance Comparison

Let’s compare our optimizations:

def benchmark():

"""Compare search algorithms."""

from board import Board

from search import SearchEngine

import time

board = Board()

# Test position: complex middlegame

board.from_fen("r1bq1rk1/ppp2ppp/2np1n2/2b1p3/2B1P3/2NP1N2/PPP2PPP/R1BQ1RK1 w - - 0 8")

depths = [3, 4, 5]

for depth in depths:

print(f"\n{'='*60}")

print(f"Depth {depth}")

print('='*60)

# Basic minimax (without alpha-beta)

engine1 = SearchEngine(board)

engine1.tt.table.clear() # Disable TT

start = time.time()

move1, score1 = engine1.find_best_move(depth)

time1 = time.time() - start

nodes1 = engine1.nodes_searched

print(f"Basic Minimax: {nodes1:,} nodes in {time1:.2f}s "

f"({nodes1/time1:,.0f} nps)")

# With alpha-beta

engine2 = SearchEngine(board)

engine2.tt.table.clear() # Disable TT

start = time.time()

move2, score2 = engine2.find_best_move_alphabeta(depth)

time2 = time.time() - start

nodes2 = engine2.nodes_searched

print(f"Alpha-Beta: {nodes2:,} nodes in {time2:.2f}s "

f"({nodes2/time2:,.0f} nps)")

print(f"Improvement: {nodes1/nodes2:.1f}x fewer nodes, "

f"{time1/time2:.1f}x faster")

# With TT

engine3 = SearchEngine(board)

start = time.time()

move3, score3 = engine3.find_best_move_alphabeta(depth)

time3 = time.time() - start

nodes3 = engine3.nodes_searched

print(f"Alpha-Beta+TT: {nodes3:,} nodes in {time3:.2f}s "

f"({nodes3/time3:,.0f} nps)")

print(f"Improvement: {nodes1/nodes3:.1f}x fewer nodes, "

f"{time1/time3:.1f}x faster vs basic")

if __name__ == "__main__":

benchmark()Expected results at depth 5:

- Basic minimax: ~3-5 million nodes

- Alpha-beta: ~300k-500k nodes (10x improvement)

- Alpha-beta + TT: ~100k-200k nodes (20-30x improvement)

What We’ve Accomplished

In this post, we’ve built the thinking engine for PyMinMaximus:

✅ Minimax algorithm for optimal move selection

✅ Alpha-beta pruning for 10x+ speedup

✅ Move ordering for better pruning

✅ Transposition tables to avoid redundant searches

✅ Iterative deepening for time management

✅ Tactical puzzle solving at depth 5-6

PyMinMaximus can now play chess! It’s not strong yet – our evaluation function only counts material – but it understands tactics and can find forced wins.

Limitations and Next Steps

Our current engine has some weaknesses:

- Positional blindness: It only sees material, not position quality

- Horizon effect: It can’t see beyond the search depth

- No opening knowledge: Wastes time “thinking” in book positions

- No endgame tablebase: Can’t play simple endgames perfectly

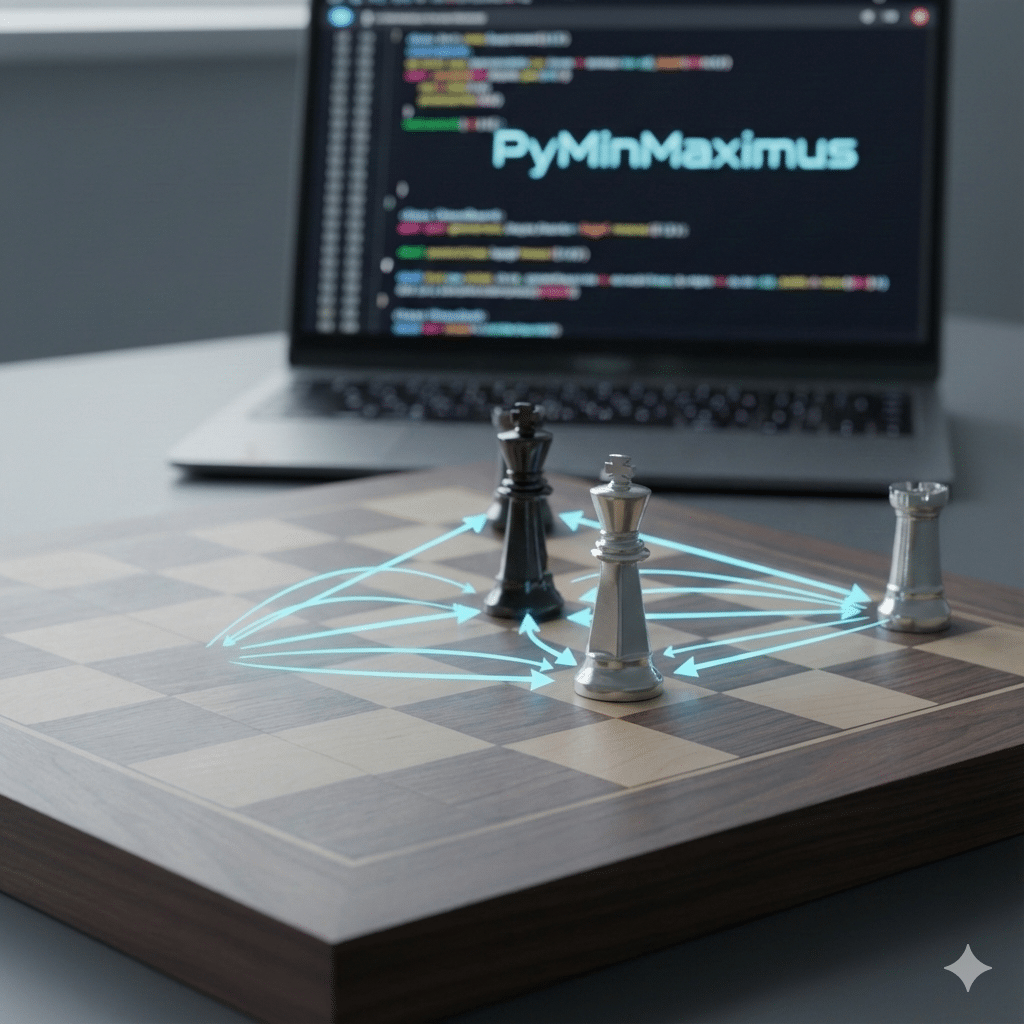

In Part 3, we’ll address the first issue by building a sophisticated evaluation function that understands:

- Piece-square tables for positional play

- Pawn structure (doubled, isolated, passed pawns)

- King safety

- Piece mobility and activity

- Endgame vs. middlegame evaluation

Stay tuned as PyMinMaximus learns the finer points of chess strategy! Missed part 1, click here to learn the basics of building a board and move generation.

Questions or comments? Let me know below! The complete code is available at our GitHub repository. Sign for for our newsletter to be alerted to new posts and to get behind the scenes looks at what we are working on next.